Implementation details of Java-based Fedora Ingestion code

Implementation Phase

(Return to MediaWiki_based_ingest_tool)

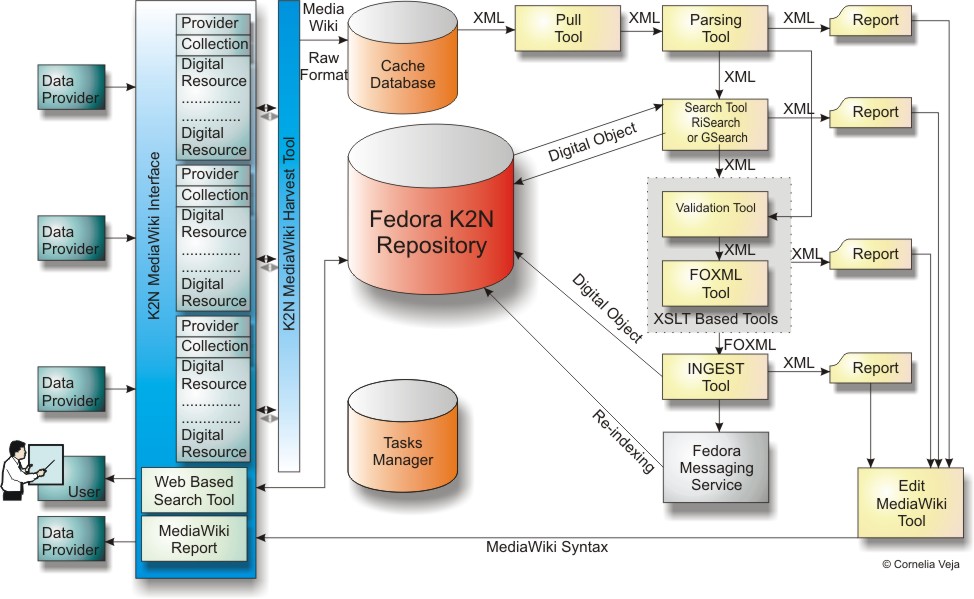

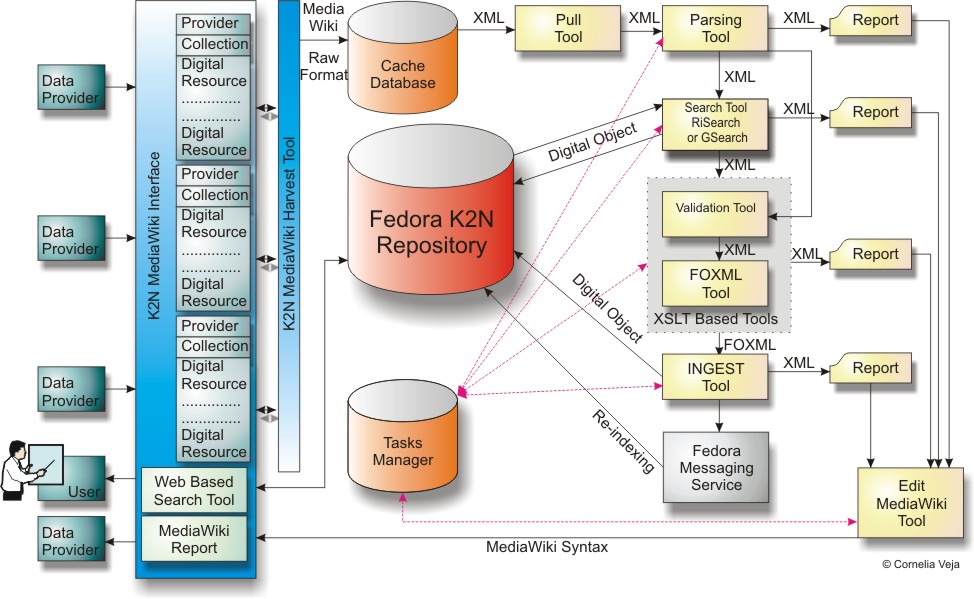

Harvest Tool

The harvest tool periodically polls the MediaWiki address, looking for metadata published online since the last successful query. Currently, the tool takes the main application configuration file as its input. The running application instance reads the harvest source parameter and queries the API exposed by the K2N Harvest Tool:

http://fedora.keytonature.net/{wikiparameter}/index.php?title=Special:TemplateParameterIndex&action=send&do=export&template=Metadata&returnpar=BaseURL;Collection_Page;Modified

There are three cases:

- there is no new metadata, so the application exits;

- the harvest tool returns metadata for new resource collections;

- metadata for a collection is returned, but its Modified value is the same as that of the last successful ingestion of this collection. In this case the application skips the collection and looks for the next available one.

All new collection metadata is stored in a work database as jobs to do. See also Implementation_details_of_Java-based_Fedora_Ingestion_code#Task_management.
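The three harvest cases can be sketched in Java as a filter over the exported rows. This is a minimal sketch under assumptions. The class and method names (`HarvestSketch`, `exportUrl`, `jobsToQueue`) are hypothetical. It also assumes the `Modified` values are compared as plain strings, keyed by `Collection_Page`. An empty result corresponds to the "no new metadata, exit" case.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

/** Hypothetical sketch of the harvest decision; not the tool's actual classes. */
final class HarvestSketch {

    /** One exported row: the BaseURL;Collection_Page;Modified parameters. */
    static final class CollectionRow {
        final String baseUrl;
        final String collectionPage;
        final String modified;

        CollectionRow(String baseUrl, String collectionPage, String modified) {
            this.baseUrl = baseUrl;
            this.collectionPage = collectionPage;
            this.modified = modified;
        }
    }

    /** Builds the TemplateParameterIndex export query for one wiki parameter. */
    static String exportUrl(String wikiParameter) {
        return "http://fedora.keytonature.net/" + wikiParameter
            + "/index.php?title=Special:TemplateParameterIndex&action=send&do=export"
            + "&template=Metadata&returnpar=BaseURL;Collection_Page;Modified";
    }

    /**
     * Returns the rows to store as jobs. lastIngested maps Collection_Page to the
     * Modified value recorded at its last successful ingestion (assumed storage).
     */
    static List<CollectionRow> jobsToQueue(List<CollectionRow> harvested,
                                           Map<String, String> lastIngested) {
        List<CollectionRow> jobs = new ArrayList<>();
        for (CollectionRow row : harvested) {
            String previous = lastIngested.get(row.collectionPage);
            if (row.modified.equals(previous)) {
                continue; // unchanged since the last successful ingestion: skip it
            }
            jobs.add(row);
        }
        return jobs; // empty list: nothing new, the application can exit
    }
}
```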

Parsing Tool

The parsing tool downloads new metadata and performs a simple syntactic analysis to detect missing metadata. At this stage, a simple transformation of the metadata is also needed. A good example is the translation between the element names of the Infobox Organisation wiki template, used for providers, and the K2N metadata elements defined by the Resource Metadata Exchange Agreement.

Example from Slovenian Museum of Natural History (PMSL):

{{Infobox Organisation

| name = Slovenian Museum<BR>of Natural History, Ljubljana

| logo = [[Image:PMSL.jpg|230px]]

| metadata language = EN

| abbreviation = PMSL

| type = Governmental research organization

| foundation = 1821

| location_country = Slovenia

| location_city = Ljubljana

| homepage = [http://www2.pms-lj.si/pmsgb.html Slovenian Museum of Natural History]

| Wikipedia page = [http://en.wikipedia.org/wiki/Slovenian_Museum_of_Natural_History Slovenian Museum of Natural History]

}}

{{Infobox Organisation

| name = Prirodoslovni muzej Slovenije, Ljubljana

| logo = [[Image:PMSL.jpg|230px]]

| metadata language = SI

| abbreviation = PMSL

| type = Javni zavod

| foundation = 1821

| location_country = Slovenija

| location_city = Ljubljana

| homepage = [http://www2.pms-lj.si/ Prirodoslovni muzej Slovenije]

| Wikipedia page = [http://en.wikipedia.org/wiki/Slovenian_Museum_of_Natural_History Slovenian Museum of Natural History]

}}

Moreover, in this phase, for providers described in two languages, a compacting service merges the two descriptions and prepares the elements for ingest. This operation is necessary because the collection will later hold a back reference to the provider. For example, the PMSL provider is described in two languages, English and Slovenian. For the Fedora ingest, the Parsing Tool prepares the following mapping:

<dc:title xml:lang="en">PMSL</dc:title>

<dc:title xml:lang="si">PMSL</dc:title>

<k2n:Description xml:lang="en">Slovenian Museum of Natural History, Ljubljana</k2n:Description>

<k2n:Description xml:lang="si">Prirodoslovni muzej Slovenije, Ljubljana</k2n:Description>

<k2n:Caption xml:lang="en">Governmental research organization</k2n:Caption>

<k2n:Caption xml:lang="si">Javni zavod</k2n:Caption>

<k2n:Best_Quality_URI>http://www.keytonature.eu/w/Slovenian_Museum_of_Natural_History_(PMSL)</k2n:Best_Quality_URI>
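The compacting step can be sketched as follows. It merges the per-language Infobox records into the element list shown in the mapping above. This is a hypothetical sketch, not the Parsing Tool's code, and it rests on three assumptions read off the PMSL example:

- the field-to-element mapping is abbreviation to `dc:title`, name to `k2n:Description`, and type to `k2n:Caption`;
- the `metadata language` value becomes a lower-case `xml:lang`;
- `<BR>` in a wiki value becomes a space.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Locale;
import java.util.Map;

/** Hypothetical sketch of the bilingual compacting step. */
final class ProviderCompactor {

    // Template field -> target element, in the order used by the PMSL example.
    private static final Map<String, String> FIELD_TO_ELEMENT = new LinkedHashMap<>();
    static {
        FIELD_TO_ELEMENT.put("abbreviation", "dc:title");
        FIELD_TO_ELEMENT.put("name", "k2n:Description");
        FIELD_TO_ELEMENT.put("type", "k2n:Caption");
    }

    /** Emits one element per field and language, then the Best_Quality_URI. */
    static String compact(List<Map<String, String>> languageRecords, String bestQualityUri) {
        StringBuilder xml = new StringBuilder();
        for (Map.Entry<String, String> mapping : FIELD_TO_ELEMENT.entrySet()) {
            for (Map<String, String> record : languageRecords) {
                String value = record.get(mapping.getKey());
                if (value == null) {
                    continue; // missing field: reported by the syntactic analysis instead
                }
                String lang = record.getOrDefault("metadata language", "").toLowerCase(Locale.ROOT);
                xml.append('<').append(mapping.getValue())
                   .append(" xml:lang=\"").append(escape(lang)).append("\">")
                   .append(escape(clean(value)))
                   .append("</").append(mapping.getValue()).append(">\n");
            }
        }
        xml.append("<k2n:Best_Quality_URI>").append(escape(bestQualityUri))
           .append("</k2n:Best_Quality_URI>\n");
        return xml.toString();
    }

    // Wiki line breaks such as <BR> become single spaces.
    private static String clean(String wikiValue) {
        return wikiValue.replaceAll("(?i)<br\\s*/?>", " ").replaceAll("\\s+", " ").trim();
    }

    private static String escape(String text) {
        return text.replace("&", "&amp;").replace("<", "&lt;")
                   .replace(">", "&gt;").replace("\"", "&quot;");
    }
}
```

Keeping the mapping in a `LinkedHashMap` preserves the element order of the example output and keeps the vocabulary translation in one place.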

See also: Definition_specs_for_Fedora_Ingestion_Service#Mapping_between_Wiki_Provider_records_and_Fedora

PID Generator

The PID Generator establishes each object identifier (PID) from an MD5 hash computed over selected metadata prepared for ingestion, as follows:

- In the latest version of the Fedora Ingest Service, the PID for a provider is built as follows:

"K2N" namespace + "Provider_" + MD5 hash of BaseURL+PageName, where BaseURL is the MediaWiki base URL of the provider's MediaWiki page (PageName). This algorithm is meant to ensure that the PID is both persistent over time and unique.

- For collection resources, the latest version uses:

"K2N" namespace + "Collection_" + MD5 hash of BaseURL+Collection_Page. If the provider has already assigned a ResourceID to the collection, the MD5 hash is applied to ResourceID+BaseURL+Collection_Page.

- For collection member objects, the latest version of the Fedora Ingest Service builds the PID in the same manner:

"K2N:" + x + "_" + MD5 hash of ResourceID (if it exists)+Collection_Page+BaseURL+Provider_Page. If no ResourceID is provided, Best_Quality_URI is used instead.

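The PID rules above can be sketched with the standard `java.security.MessageDigest`. This is a hypothetical sketch, not the service's code, and it makes four assumptions:

- strings are hashed as UTF-8;
- the digest is written as 32 lower-case hex characters;
- the parts are concatenated exactly in the order listed, with no separator;
- the `objectType` parameter stands for the undefined `x` in the member rule.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

/** Hypothetical sketch of the MD5-based PID rules. */
final class PidGenerator {

    private static final String NAMESPACE = "K2N";

    static String providerPid(String baseUrl, String pageName) {
        return NAMESPACE + ":Provider_" + md5Hex(baseUrl + pageName);
    }

    static String collectionPid(String resourceId, String baseUrl, String collectionPage) {
        String key = isBlank(resourceId)
            ? baseUrl + collectionPage
            : resourceId + baseUrl + collectionPage;
        return NAMESPACE + ":Collection_" + md5Hex(key);
    }

    // objectType stands in for the "x" of the member rule (assumption).
    static String memberPid(String objectType, String resourceId, String bestQualityUri,
                            String collectionPage, String baseUrl, String providerPage) {
        String id = isBlank(resourceId) ? bestQualityUri : resourceId;
        return NAMESPACE + ":" + objectType + "_"
            + md5Hex(id + collectionPage + baseUrl + providerPage);
    }

    /** MD5 of the UTF-8 bytes, as 32 lower-case hex characters. */
    static String md5Hex(String input) {
        try {
            byte[] digest = MessageDigest.getInstance("MD5")
                .digest(input.getBytes(StandardCharsets.UTF_8));
            StringBuilder hex = new StringBuilder(32);
            for (byte b : digest) {
                hex.append(String.format("%02x", b));
            }
            return hex.toString();
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("MD5 must be available on every Java platform", e);
        }
    }

    private static boolean isBlank(String s) {
        return s == null || s.isEmpty();
    }
}
```

Because the hash depends only on wiki addresses and identifiers, re-ingesting the same page yields the same PID. That is the persistence property the text aims for.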
Search Tool

The Search Tool looks for objects that have already been ingested. It is based on SOAP clients (Fedora API-A, API-M and the GSearch SOAP client).

Using SPARQL and GSearch for Collection Retrieval

When a collection page is updated or deleted in MediaWiki, the K2N Fedora Commons Repository must first be searched to determine whether the collection was already ingested. At the technical level, there are two options to accomplish this:

- 1. GSearch was the first option chosen for this operation. However, GSearch is somewhat slow when searching against external relations (RELS-EXT), and its results are too verbose. Moreover, if the repository has by mistake not been indexed, GSearch never finds the collection or its members. This is inconvenient, because the repository currently contains old versions of these collections, resulting from the second survey's metadata aggregation process.

A query example using the GSearch engine:

Search time with GSearch begin at: 2009-09-23 16:02:27

Query: k2nrelation.isMemberOf:"info:fedora/K2N:Collection_G_81" AND k2nrelation.serviceProvidedBy:"info:fedora/K2N:Provider_G_5"

PIDS: K2N:IdentificationTool_G_589

PIDS: K2N:IdentificationTool_G_590

PIDS: K2N:IdentificationTool_G_591

PIDS: K2N:IdentificationTool_G_592

PIDS: K2N:IdentificationTool_G_593

PIDS: K2N:IdentificationTool_G_594

PIDS: K2N:IdentificationTool_G_595

PIDS: K2N:IdentificationTool_G_596

PIDS: K2N:IdentificationTool_G_597

PIDS: K2N:IdentificationTool_G_598

PIDS: K2N:IdentificationTool_G_599

..................................

PIDS: K2N:IdentificationTool_G_618

Search time with GSearch end at: 2009-09-23 16:02:32

- 2. SPARQL, the query language of the Semantic Web, as the second option. The results are returned in a SPARQL XML format as well.

The following query returns all members of a specified collection (@coll@) and provider (@prov@):

select ?x from <#ri>

where {

?x <fedora-rels-ext:isMemberOf> <fedora:@coll@> .

?x <fedora-rels-ext:serviceProvidedBy> <fedora:@prov@> .

}

Here, "fedora-rels-ext" is an alias for "info:fedora/fedora-system:def/relations-external#" and "fedora" is an alias for "info:fedora/". The result, expressed in SPARQL XML, looks as follows:

<sparql>

<head>

<variable name="x"/>

</head>

<results>

<result>

<x uri="info:fedora/K2N:StillImage_G_94288"/>

</result>

<result>

<x uri="info:fedora/K2N:StillImage_G_94289"/>

</result>

<result>

<x uri="info:fedora/K2N:StillImage_G_94290"/>

</result>

.....

</results>

</sparql>
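Both halves of this exchange can be sketched with the JDK's DOM parser:

- filling the `@coll@` and `@prov@` placeholders of the query above;
- reading the member PIDs back out of a result shaped like the one shown.

This is a hypothetical sketch. Sending the query to the Resource Index is left out, and the class name `MemberQuery` is illustrative. It assumes every binding appears as an `<x uri="..."/>` element, as in the sample result.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import javax.xml.parsers.DocumentBuilderFactory;
import javax.xml.parsers.ParserConfigurationException;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;
import org.xml.sax.SAXException;

/** Hypothetical helper around the member query shown above. */
final class MemberQuery {

    private static final String TEMPLATE =
        "select ?x from <#ri>\n"
      + "where {\n"
      + "?x <fedora-rels-ext:isMemberOf> <fedora:@coll@> .\n"
      + "?x <fedora-rels-ext:serviceProvidedBy> <fedora:@prov@> .\n"
      + "}";

    private static final String FEDORA_PREFIX = "info:fedora/";

    /** Substitutes the collection and provider PIDs into the query. */
    static String build(String collectionPid, String providerPid) {
        return TEMPLATE.replace("@coll@", collectionPid).replace("@prov@", providerPid);
    }

    /** Returns the PIDs bound to ?x, without the info:fedora/ prefix. */
    static List<String> parseMembers(String sparqlXml) {
        try {
            Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder()
                .parse(new ByteArrayInputStream(sparqlXml.getBytes(StandardCharsets.UTF_8)));
            NodeList bindings = doc.getElementsByTagName("x");
            List<String> pids = new ArrayList<>();
            for (int i = 0; i < bindings.getLength(); i++) {
                String uri = ((Element) bindings.item(i)).getAttribute("uri");
                pids.add(uri.startsWith(FEDORA_PREFIX) ? uri.substring(FEDORA_PREFIX.length()) : uri);
            }
            return pids;
        } catch (ParserConfigurationException | SAXException | IOException e) {
            throw new IllegalArgumentException("Unparseable SPARQL result", e);
        }
    }
}
```

An empty list from `parseMembers` would mean that the collection has no members from that provider in the repository yet.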

During the tests, the second method proved to be more reliable and faster than the first one, and its results are less verbose than those of GSearch. However, both methods are kept, and which one is actually used can be configured by setting a parameter in the main application configuration file.
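A configuration switch between the two methods might look like the following. The property key `collection.search.method` and its values are hypothetical, since the real parameter name is not given here. The SPARQL default is an assumption, chosen because the tests favoured that method.

```java
import java.util.Locale;
import java.util.Properties;

/** Hypothetical reader for the search-method parameter. */
final class SearchMethodConfig {

    enum Method { SPARQL, GSEARCH }

    // Hypothetical key; the actual configuration parameter name is not documented.
    static final String KEY = "collection.search.method";

    /** Reads the method from the main configuration, defaulting to SPARQL. */
    static Method fromProperties(Properties config) {
        String value = config.getProperty(KEY, "sparql").trim().toUpperCase(Locale.ROOT);
        return Method.valueOf(value); // unknown values raise IllegalArgumentException
    }
}
```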

- Observation: ASK queries always return "false". This was reported as a bug to the Fedora Commons developer community.

Validation and FOXML Generator Tool

This section describes the Validation and FOXML Generator tool, which prepares FOXML files for ingest and establishes the relationships between digital objects.

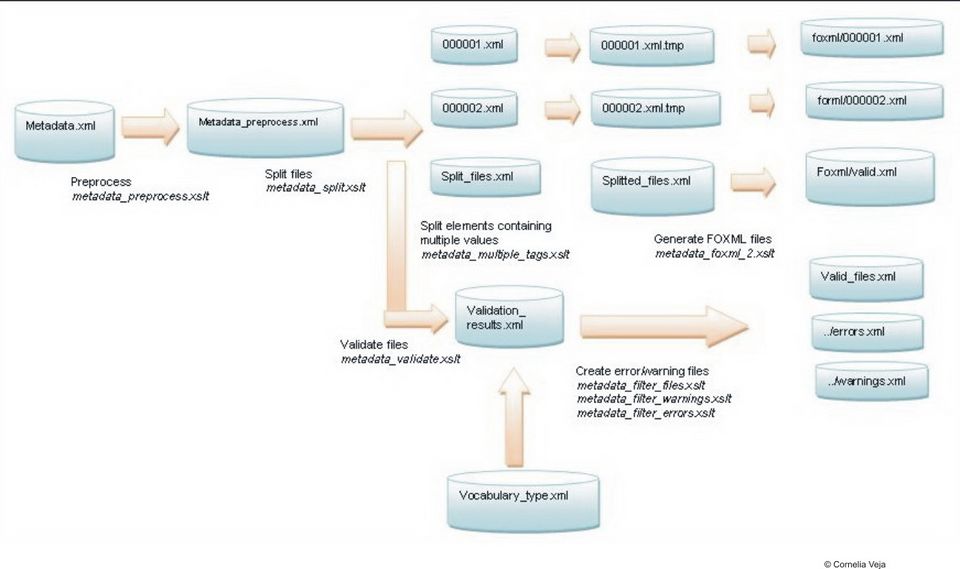

XSLT Transformation and Validation Process Description

- Steps:

- Preprocess - takes the metadata file and applies some preprocessing (converts content of Language, Metadata_Language, Country_Codes elements to lower case);

- Split files - splits the files containing several metadata sets into smaller files (000001.xml, ...), each containing one metadata set;

- Validate files - validates each small file and produces an XML file containing the validation report. At this stage, a vocabulary can be used for every metadata element that has a controlled vocabulary. The vocabulary is defined separately for each metadata element that requires limited, exact values, in a separate XML file named metadata_name.vocabulary.xml.

- Create error/warning files – takes the validation report file and creates two XML files, errors.xml and warnings.xml, plus an XML file valid_files.xml listing the valid files (those without errors) that can be processed further.

- Split elements containing multiple values - splits each element containing multiple values into several elements, one per value;

- Generate FOXML files – generates the FOXML files from the XML files.

- At the end of this process, all intermediate XML files are deleted, and the Java garbage collector is called explicitly to free memory. The FOXML files prepared for ingest and the XML report files remain in the work directory.
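The Preprocess step can be sketched through the standard TrAX API (`javax.xml.transform`), which Xalan-Java implements. This is a minimal sketch, not the tool's stylesheet. It is an identity transform that lower-cases only the text of `Language`, `Metadata_Language` and `Country_Codes`. XSLT 1.0 has no `lower-case()` function, so `translate()` is used, and it covers ASCII letters only.

```java
import java.io.StringReader;
import java.io.StringWriter;
import javax.xml.transform.Transformer;
import javax.xml.transform.TransformerException;
import javax.xml.transform.TransformerFactory;
import javax.xml.transform.stream.StreamResult;
import javax.xml.transform.stream.StreamSource;

/** Hypothetical sketch of the Preprocess step. */
final class PreprocessStep {

    // Identity transform, except that the text of the three listed elements is lower-cased.
    private static final String XSLT =
        "<xsl:stylesheet version='1.0' xmlns:xsl='http://www.w3.org/1999/XSL/Transform'>"
      + "<xsl:output method='xml' omit-xml-declaration='yes'/>"
      + "<xsl:template match='@*|node()'>"
      + "<xsl:copy><xsl:apply-templates select='@*|node()'/></xsl:copy>"
      + "</xsl:template>"
      + "<xsl:template match='Language/text()|Metadata_Language/text()|Country_Codes/text()'>"
      + "<xsl:value-of select=\"translate(., 'ABCDEFGHIJKLMNOPQRSTUVWXYZ', 'abcdefghijklmnopqrstuvwxyz')\"/>"
      + "</xsl:template>"
      + "</xsl:stylesheet>";

    static String preprocess(String metadataXml) {
        try {
            // With xalan.jar on the classpath, newInstance() picks Xalan; otherwise the JDK's built-in processor.
            Transformer transformer = TransformerFactory.newInstance()
                .newTransformer(new StreamSource(new StringReader(XSLT)));
            StringWriter out = new StringWriter();
            transformer.transform(new StreamSource(new StringReader(metadataXml)), new StreamResult(out));
            return out.toString();
        } catch (TransformerException e) {
            throw new IllegalStateException("Preprocessing failed", e);
        }
    }
}
```

The later steps (split, validate, generate FOXML) would follow the same pattern, each applying its own stylesheet to the previous step's output.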

Technically, this tool uses the Xalan-Java 2.7.1 XSLT processor.

The tool can also be run standalone, taking as input parameters the path to the temporary work directory containing the XML files and, if needed, the path to the vocabulary directory.

Effective Ingest Tool

The main operation is performed by the Effective Ingest tool, which ingests the FOXML files prepared in the previous steps. During this operation, the Task Manager monitors all activity. Based on the number of collection members, the Task Manager estimates the approximate ingest time. In addition, for a large collection it creates restart points for the ingest operation, so the ingest is done in batches of 1000 digital objects. The output of this operation is an XML report with ingest statistics: how many objects were ingested successfully, how many were overwritten, and the duration of the operation.
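Batching with restart points can be sketched like this. It is a hypothetical sketch, not the Task Manager's code. It assumes a restart point is the index of the next FOXML file to ingest, so each batch boundary (restart point + k × 1000) is a natural place to record progress. It also assumes the time estimate is simply the member count times an assumed per-object cost.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical sketch of batch planning for the Effective Ingest tool. */
final class IngestBatches {

    static final int BATCH_SIZE = 1000;

    /** Splits the remaining FOXML files into batches, starting at the restart point. */
    static List<List<String>> fromRestartPoint(List<String> foxmlFiles, int restartPoint) {
        if (restartPoint < 0 || restartPoint > foxmlFiles.size()) {
            throw new IllegalArgumentException("Restart point out of range: " + restartPoint);
        }
        List<List<String>> batches = new ArrayList<>();
        for (int start = restartPoint; start < foxmlFiles.size(); start += BATCH_SIZE) {
            int end = Math.min(start + BATCH_SIZE, foxmlFiles.size());
            batches.add(new ArrayList<>(foxmlFiles.subList(start, end)));
        }
        return batches;
    }

    /** Rough ingest time from the member count and an assumed cost per object. */
    static long estimatedSeconds(int memberCount, double secondsPerObject) {
        return Math.round(memberCount * secondsPerObject);
    }
}
```

For example, with 2500 files and restart point 2000, only one batch of 500 files remains.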

As an optional feature, the working directory can be deleted at the end of the ingest. This option was disabled during the tests.

This service can also be run as a standalone service, with the following input parameters: the path of the working directory in which the FOXML files are stored, and the restart point from which the ingest should be resumed.

Technically, the Effective Ingest tool is a SOAP API-M client of the Fedora Commons API. The SOAP API-A is also used, to check first whether the digital object is already in the repository. If an old version of the object is found, it is deleted first, and only then is the ingest request sent. This step is a second check for the existence of an old object. Its purpose is the following: if a previous ingest operation did not complete successfully and the relationships with the collection and providers were broken, the current ingest still has another chance to complete successfully.

This step is strongly tied to the Task Manager. See the Task management section below, at Implementation_details_of_Java-based_Fedora_Ingestion_code#Task_management.
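The delete-then-ingest logic can be sketched against a hypothetical `Repository` interface. In a real client, its three methods would wrap the API-A lookup and the API-M purge and ingest operations. Those SOAP calls are not reproduced here, and the interface and class names are illustrative. The returned `Result` matches the two counters of the ingest report (ingested vs. overwritten).

```java
import java.util.HashSet;
import java.util.Set;

/** Hypothetical sketch of the replace-on-ingest step. */
final class IngestStep {

    /** Stand-in for the Fedora SOAP clients (API-A lookup, API-M purge and ingest). */
    interface Repository {
        boolean exists(String pid);
        void purge(String pid);
        void ingest(String pid, byte[] foxml);
    }

    enum Result { INGESTED, OVERWRITTEN }

    /** Removes any old version first, so broken relations from a failed run are not kept. */
    static Result ingestReplacing(Repository repo, String pid, byte[] foxml) {
        boolean existed = repo.exists(pid);
        if (existed) {
            repo.purge(pid);
        }
        repo.ingest(pid, foxml);
        return existed ? Result.OVERWRITTEN : Result.INGESTED;
    }

    /** In-memory stand-in for trying the logic without a Fedora server. */
    static final class InMemoryRepository implements Repository {
        final Set<String> pids = new HashSet<>();
        int purges;

        @Override public boolean exists(String pid) { return pids.contains(pid); }
        @Override public void purge(String pid) { pids.remove(pid); purges++; }
        @Override public void ingest(String pid, byte[] foxml) { pids.add(pid); }
    }
}
```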

MediaWiki Report Tool

The MediaWiki editing tool was created to write messages for metadata providers. Its output consists of MediaWiki pages linked to the existing collection pages. Throughout the workflow, messages such as warnings, errors and ingest statistics are collected in XML files. The MediaWiki Report tool gathers these messages and automatically creates a MediaWiki page, which is tied to the collection template page from which the metadata was originally collected. This is considered the fifth step in the overall workflow.

From a technical point of view, this tool uses the Bliki engine, a Java extension API for MediaWiki released under the Eclipse Public License v1.0. See also Bliki engine.

The MediaWiki editing tool can be run as a standalone application, taking as input parameters the main properties configuration file and the original path of the collection working directory.
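The report page could be assembled roughly as follows. This sketch only builds the wikitext and the sub-page title, following the Collection/Metadata_Aggregation_Report path. Uploading the page (via Bliki or another MediaWiki client) is not shown. The section headings and the use of `<nowiki>` around raw messages are hypothetical choices.

```java
import java.util.List;

/** Hypothetical sketch of the provider report page content. */
final class ReportPage {

    /** Sub-page title following the Collection/Metadata_Aggregation_Report path. */
    static String title(String collectionPage) {
        return collectionPage + "/Metadata_Aggregation_Report";
    }

    /** Renders collected errors, warnings and ingest statistics as wikitext. */
    static String render(String collectionPage, List<String> errors, List<String> warnings,
                         int ingested, int overwritten) {
        StringBuilder wiki = new StringBuilder();
        wiki.append("Report for [[").append(collectionPage).append("]]\n\n");
        wiki.append("== Errors ==\n");
        appendItems(wiki, errors);
        wiki.append("== Warnings ==\n");
        appendItems(wiki, warnings);
        wiki.append("== Ingest statistics ==\n");
        wiki.append("* Objects ingested: ").append(ingested).append('\n');
        wiki.append("* Objects overwritten: ").append(overwritten).append('\n');
        return wiki.toString();
    }

    // <nowiki> keeps raw validator messages from being interpreted as wiki markup.
    private static void appendItems(StringBuilder wiki, List<String> items) {
        if (items.isEmpty()) {
            wiki.append("* none\n");
        }
        for (String item : items) {
            wiki.append("* <nowiki>").append(item).append("</nowiki>\n");
        }
    }
}
```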

Workflow

- Metadata providers insert metadata in the K2N MediaWiki, using a template;

- Metadata are pulled from MediaWiki into a cache database;

- The ingest tool is "new metadata sensitive": it ingests the new metadata and creates new Fedora digital objects;

- The GSearch indexing service is triggered via Fedora’s messaging service and adds the new digital objects to its indexes;

- If there are messages for providers, they are shown on a page with the following path: Collection/Metadata_Aggregation_Report;

- The search tool, based on the GSearch engine, is able to retrieve new digital objects after every new ingest.

Implementation restrictions

- Only one task performing thumbnail generation may be active at any time;

- No more than 3 tasks may be active at the same time. Assuming one task performs thumbnail generation, at most 2 tasks perform ingestion at the same time.
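The two restrictions can be expressed with `java.util.concurrent.Semaphore`. This is a minimal sketch that holds only inside a single JVM. Since the application instances are separate processes launched by the scheduler, the real dispatcher would have to keep the same counts in the work database. The class and method names are hypothetical.

```java
import java.util.concurrent.Semaphore;

/** Hypothetical in-process model of the task limits (3 tasks, 1 thumbnail task). */
final class TaskLimits {

    private final Semaphore anyTask = new Semaphore(3);
    private final Semaphore thumbnailTask = new Semaphore(1);

    /** A thumbnail task needs both a general slot and the single thumbnail slot. */
    boolean tryStartThumbnail() {
        if (!anyTask.tryAcquire()) {
            return false;
        }
        if (!thumbnailTask.tryAcquire()) {
            anyTask.release(); // give back the general slot we just took
            return false;
        }
        return true;
    }

    void finishThumbnail() {
        thumbnailTask.release();
        anyTask.release();
    }

    /** Ingestion tasks only compete for the general slots. */
    boolean tryStartIngest() {
        return anyTask.tryAcquire();
    }

    void finishIngest() {
        anyTask.release();
    }
}
```

With one thumbnail task running, only two general slots remain, which gives the "at most 2 ingestion tasks" rule without a separate counter.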

Task management

Because instances are launched by a task scheduler, several instances of the application can run at the same time. Currently, the number of instances is limited to 3. To enforce this, running tasks are managed by a central dispatcher application, which launches the appropriate application according to the definition specs. See also Definition_specs_for_Fedora_Ingestion_Service. The application distinguishes three kinds of activities (jobs to do):

- Thumbnail generation;

- Collection for ingestion;

- Other collections for ingestion: the TemplateParameterIndexer is asked for more collections to ingest.

A typical work session proceeds as follows:

- the task scheduler launches the application. See Implementation_details_of_Java-based_Fedora_Ingestion_code#Implementation_restrictions above.

- the application reads the work database, looking for a waiting job:

If a thumbnail generation job exists

then:

launch the Thumbnail Generation job;

else if a collection is waiting for ingestion

then:

launch the Effective Ingestion job;

else download another collection from MediaWiki, if one exists.

- the job in the database is assigned to the task;

- the job is activated using a code for each operation. For every operation, the end date of the operation is written to the database. In addition, for an ingestion job, the approximate processing time, the number of digital objects and, if needed, the last restart point are written to the database.

- while the job runs, every successfully completed operation is recorded in a success table in the database. The job is deleted from the regular table of jobs to do only if it does not contain images. If it does, the job is marked for the Thumbnail Generator and remains in the "to do jobs" list.

- if the job fails, the task detaches from the job and leaves it in its initial state, so that a later task can try to complete it. A counter for the number of retries is needed here, but it is not implemented yet.

- the task itself exits successfully.
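The job selection and completion rules can be sketched over an in-memory job list. Two rules are covered: thumbnail jobs before ingestion jobs, and ingestion jobs with images staying in the list as thumbnail jobs. In the application, this state lives in the work database. The `Job` and `JobType` types are hypothetical. A failed job is simply left untouched, which matches the "initial state" rule.

```java
import java.util.List;
import java.util.Optional;

/** Hypothetical in-memory model of the work-session job handling. */
final class WorkSession {

    /** Declared in priority order: thumbnail jobs first, then ingestion jobs. */
    enum JobType { THUMBNAILS, INGEST }

    static final class Job {
        final String collectionPage;
        final JobType type;
        final boolean hasImages;

        Job(String collectionPage, JobType type, boolean hasImages) {
            this.collectionPage = collectionPage;
            this.type = type;
            this.hasImages = hasImages;
        }
    }

    /** Picks the waiting job with the highest-priority type, if any. */
    static Optional<Job> nextJob(List<Job> waiting) {
        for (JobType wanted : JobType.values()) {
            for (Job job : waiting) {
                if (job.type == wanted) {
                    return Optional.of(job);
                }
            }
        }
        // Nothing waiting: the caller should download another collection from MediaWiki.
        return Optional.empty();
    }

    /** After a successful ingest: drop the job, or keep it for thumbnails if it has images. */
    static void completeIngest(List<Job> waiting, Job done) {
        waiting.remove(done);
        if (done.hasImages) {
            waiting.add(new Job(done.collectionPage, JobType.THUMBNAILS, true));
        }
    }
}
```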

For implementation details, see also Implementation_details_of_Java-based_Fedora_Ingestion_code/Technical_documentation.

Thumbnail Generator

For collections that contain images, a further task instance tries to generate thumbnails where necessary. This step makes the job of the MediaIBIS search tool easier when it searches the repository. Not all images have been provided with thumbnails, so this task is performed by the Thumbnail Generator. The tool looks in the work database for images that have no thumbnails. If there are any, a second thread is started, which generates the thumbnails and makes them available to the search tool.
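The scaling part of thumbnail generation can be sketched with `java.awt.image.BufferedImage`. This is a hypothetical sketch, not the Thumbnail Generator's code. It assumes thumbnails keep the aspect ratio and that small images are not enlarged. The maximum side length is left to the caller, because the real size is not stated.

```java
import java.awt.Graphics2D;
import java.awt.RenderingHints;
import java.awt.image.BufferedImage;

/** Hypothetical sketch of the thumbnail scaling step. */
final class Thumbnails {

    /** Scales so the longer side is at most maxSide pixels, preserving the aspect ratio. */
    static BufferedImage thumbnail(BufferedImage source, int maxSide) {
        double scale = Math.min(1.0,
            (double) maxSide / Math.max(source.getWidth(), source.getHeight()));
        int width = Math.max(1, (int) Math.round(source.getWidth() * scale));
        int height = Math.max(1, (int) Math.round(source.getHeight() * scale));
        BufferedImage thumb = new BufferedImage(width, height, BufferedImage.TYPE_INT_RGB);
        Graphics2D g = thumb.createGraphics();
        try {
            g.setRenderingHint(RenderingHints.KEY_INTERPOLATION,
                               RenderingHints.VALUE_INTERPOLATION_BILINEAR);
            g.drawImage(source, 0, 0, width, height, null);
        } finally {
            g.dispose(); // release native graphics resources
        }
        return thumb;
    }
}
```

In practice, the source would be read with `javax.imageio.ImageIO.read` and the result written with `ImageIO.write`. The work could run on a background thread, for example through an `ExecutorService`, in line with the "second thread" described above.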
