Implementation details of Java-based Fedora Ingestion code

Implementation Phase

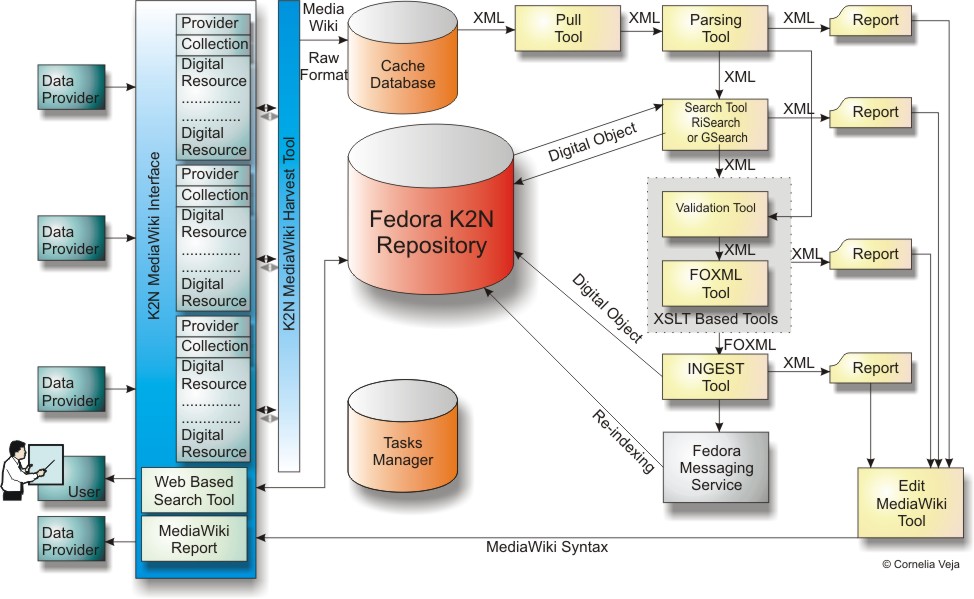

Harvest Tool

- A harvest tool that periodically polls the MediaWiki address for metadata published online since the last ingest;
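As a rough illustration of the "new since the last ingest" check, the sketch below filters a list of page changes by timestamp. The `HarvestFilter` and `PageChange` names are hypothetical, and how the page list is actually obtained from MediaWiki is not described here, so it is left out. The recurrent polling itself could be scheduled with, for example, `ScheduledExecutorService.scheduleAtFixedRate`.

```java
import java.time.Instant;
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper: selects pages modified after the last ingest. */
final class HarvestFilter {

    /** Hypothetical record of one MediaWiki page and its last modification time. */
    static final class PageChange {
        final String title;
        final Instant lastModified;

        PageChange(String title, Instant lastModified) {
            this.title = title;
            this.lastModified = lastModified;
        }
    }

    private HarvestFilter() {}

    /** Returns the titles of all pages modified strictly after lastIngest. */
    static List<String> changedSince(List<PageChange> pages, Instant lastIngest) {
        List<String> titles = new ArrayList<>();
        for (PageChange page : pages) {
            if (page.lastModified.isAfter(lastIngest)) {
                titles.add(page.title);
            }
        }
        return titles;
    }
}
```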

Parsing Tool

- A parsing tool, which downloads the new metadata. It performs a simple syntactic analysis in order to generate a FOXML file that is as correct as possible.

PID Generator

A PID generator establishes the object identifier from an MD5 checksum calculated over selected metadata prepared for ingestion, as follows:

- In the latest version of the Fedora Ingest Service, the PID for a provider is built as follows:

"K2N" namespace+"Provider_"+MD5 hash applied to BaseURL+PageName, where BaseURL is the base URI of the MediaWiki installation that hosts the provider's page (PageName). This scheme is meant to keep the PID persistent over time and unique.

- For collection resources, the latest version builds the PID as follows:

"K2N" namespace+"Collection_"+MD5 hash applied to BaseURL+Collection_Page. If the provider has already assigned a ResourceID to the collection, the MD5 hash is applied to ResourceID+BaseURL+Collection_Page.

- For collection member objects, the latest version of the Fedora Ingest Service builds the PID in the same manner:

"K2N:"+x+"_"+MD5 hash applied to ResourceID (if it exists)+Collection_Page+BaseURL+Provider_Page. If ResourceID is not provided, Best_Quality_URI is used instead.
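The three PID rules above can be sketched in Java as follows. This is a minimal illustration, not the tool's actual code: the class and method names are hypothetical, and several details are assumptions that the text does not specify (UTF-8 encoding of the input, lowercase hexadecimal encoding of the digest, plain concatenation of the parts without a separator, and ":" as the separator after the namespace). The meaning of `x` in the member rule is not stated, so it is passed in as a parameter.

```java
import java.math.BigInteger;
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

/** Hypothetical sketch of the MD5-based PID scheme; names are illustrative only. */
final class PidGenerator {

    private static final String NAMESPACE = "K2N";

    private PidGenerator() {}

    /** Returns the MD5 digest of the input as 32 lowercase hex characters (assumed encoding). */
    static String md5Hex(String input) {
        try {
            MessageDigest md = MessageDigest.getInstance("MD5");
            byte[] digest = md.digest(input.getBytes(StandardCharsets.UTF_8));
            return String.format("%032x", new BigInteger(1, digest));
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("MD5 is not available", e);
        }
    }

    private static boolean isBlank(String s) {
        return s == null || s.isEmpty();
    }

    /** Provider PID: hash of BaseURL + PageName. */
    static String providerPid(String baseUrl, String pageName) {
        return NAMESPACE + ":Provider_" + md5Hex(baseUrl + pageName);
    }

    /** Collection PID: ResourceID is prepended to the hashed string only when present. */
    static String collectionPid(String resourceId, String baseUrl, String collectionPage) {
        String prefix = isBlank(resourceId) ? "" : resourceId;
        return NAMESPACE + ":Collection_" + md5Hex(prefix + baseUrl + collectionPage);
    }

    /** Member PID: falls back to Best_Quality_URI when no ResourceID is given. */
    static String memberPid(String x, String resourceId, String bestQualityUri,
                            String collectionPage, String baseUrl, String providerPage) {
        String id = isBlank(resourceId) ? bestQualityUri : resourceId;
        return NAMESPACE + ":" + x + "_" + md5Hex(id + collectionPage + baseUrl + providerPage);
    }
}
```

Because the hash depends only on the inputs, calling `providerPid` twice with the same BaseURL and PageName yields the same PID, which is the persistence property the scheme aims for.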

Search Tool

A search tool looks for objects that have already been ingested. It is based on SOAP clients (Fedora API-A, API-M and the GSearch SOAP client).

Using SPARQL and GSearch for Collection Retrieval

When a collection page is updated or deleted in MediaWiki, the K2N Fedora Commons repository must first be searched to determine whether the collection has already been ingested. Technically, there are two options for this:

- GSearch was chosen as the first option. However, GSearch is somewhat slow when searching against external relations, and its results are too verbose.

- SPARQL, the query language of the Semantic Web, is the second option. The results are also returned in the SPARQL results format.

The following query returns all members of a specified collection (@coll@) and provider (@prov@):

select ?x from <#ri>

where {

?x <fedora-rels-ext:isMemberOf> <fedora:@coll@> .

?x <fedora-rels-ext:serviceProvidedBy> <fedora:@prov@> .

}

where "fedora-rels-ext" is an alias for "info:fedora/fedora-system:def/relations-external#" and "fedora" is an alias for "info:fedora/". The result, expressed in the SPARQL results format, looks as follows:

<sparql>

<head>

<variable name="x"/>

</head>

<results>

<result>

<x uri="info:fedora/K2N:StillImage_G_94288"/>

</result>

<result>

<x uri="info:fedora/K2N:StillImage_G_94289"/>

</result>

<result>

<x uri="info:fedora/K2N:StillImage_G_94290"/>

</result>

.....

</results>

</sparql>
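A minimal Java sketch of how the query template and the result shown above might be handled: it fills in the @coll@ and @prov@ placeholders and extracts the member PIDs from the result XML. The class and method names are hypothetical, sending the query to the repository is omitted, and the parser assumes the result has exactly the shape shown (one `x` element with a `uri` attribute per result).

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import javax.xml.XMLConstants;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

/** Hypothetical helper for the collection-member query; names are illustrative only. */
final class CollectionMemberQuery {

    private static final String URI_PREFIX = "info:fedora/";

    /** The query from the text, with its two placeholders left in. */
    static final String TEMPLATE =
        "select ?x from <#ri> where { "
        + "?x <fedora-rels-ext:isMemberOf> <fedora:@coll@> . "
        + "?x <fedora-rels-ext:serviceProvidedBy> <fedora:@prov@> . }";

    private CollectionMemberQuery() {}

    /** Substitutes the collection and provider PIDs into the template. */
    static String build(String collectionPid, String providerPid) {
        return TEMPLATE.replace("@coll@", collectionPid).replace("@prov@", providerPid);
    }

    /** Extracts the PIDs from every x element's uri attribute in the result XML. */
    static List<String> parseMemberPids(String sparqlXml) {
        try {
            DocumentBuilderFactory factory = DocumentBuilderFactory.newInstance();
            factory.setFeature(XMLConstants.FEATURE_SECURE_PROCESSING, true);
            Document doc = factory.newDocumentBuilder().parse(
                new ByteArrayInputStream(sparqlXml.getBytes(StandardCharsets.UTF_8)));
            NodeList nodes = doc.getElementsByTagName("x");
            List<String> pids = new ArrayList<>();
            for (int i = 0; i < nodes.getLength(); i++) {
                String uri = ((Element) nodes.item(i)).getAttribute("uri");
                // Strip the "info:fedora/" prefix to obtain the bare PID.
                pids.add(uri.startsWith(URI_PREFIX) ? uri.substring(URI_PREFIX.length()) : uri);
            }
            return pids;
        } catch (Exception e) {
            throw new IllegalArgumentException("Could not parse SPARQL result", e);
        }
    }
}
```

An empty list returned by `parseMemberPids` would then indicate that no members of the collection have been ingested yet.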

In testing, the second method proved more reliable and faster than the first, and its results are less verbose than those of GSearch. Both methods are nevertheless kept, and the one actually used can be selected by setting a parameter in the main application configuration file.

- Observation: an ASK query returns "false" every time. This was reported as a bug to the Fedora Commons developer community.

Validation and FOXML Generator Tool

This page describes the Validation and FOXML Generator tool, intended to prepare FOXML files for ingest and to establish the relationships between digital objects.

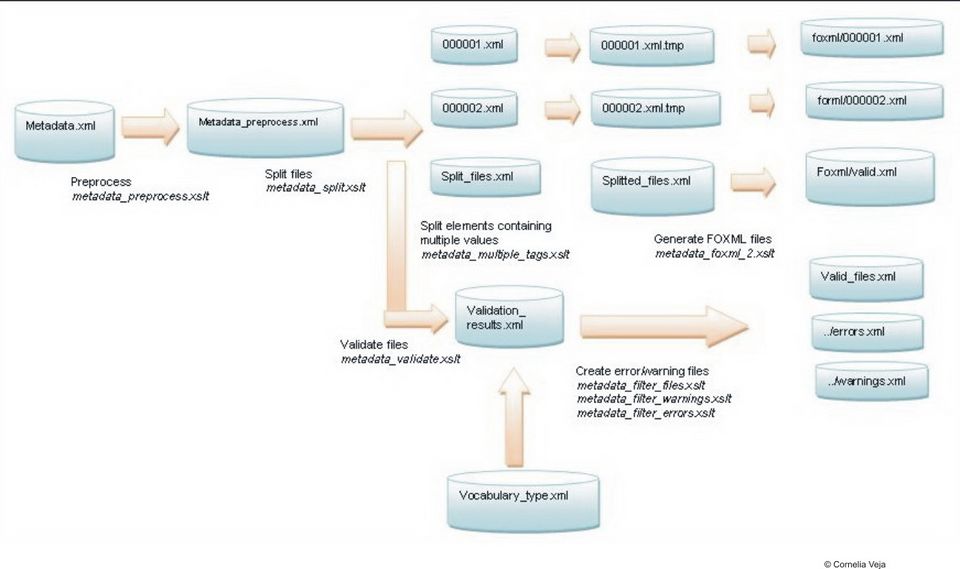

XSLT Transformation and Validation Process Description

- Steps:

- Preprocess - takes the metadata file and applies some preprocessing (converts the content of the Language, Metadata_Language and Country_Codes elements to lower case);

- Split files - splits files containing several metadata sets into smaller files (000001.xml, ...), each containing one metadata set;

- Validate files - validates each small file and produces an XML file containing the validation report. At this stage a vocabulary can be used for every metadata element that has a controlled vocabulary. The vocabulary is defined separately for each metadata element that requires a limited set of exact values. For each such element, a separate XML file named metadata_name.vocabulary.xml should be used;

- Create error/warning files - takes the validation report file and creates two XML files, errors.xml and warnings.xml, plus an XML file valid_files.xml listing the valid files (those containing no errors) that can be processed further;

- Split elements containing multiple values - splits each element containing multiple values into several elements, one per value;

- Generate FOXML files - generates the FOXML files from the XML files.
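The page title indicates that these steps are carried out with XSLT. Purely to illustrate the logic of three of them (lower-casing, controlled-vocabulary validation and splitting of multi-valued elements), here is a small sketch in plain Java. All names are hypothetical, the format of the metadata_name.vocabulary.xml files is not described here so the vocabulary is passed in as a set, and the value separator is an assumption.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;
import java.util.Set;
import java.util.regex.Pattern;

/** Hypothetical illustration of three processing steps; not the actual XSLT logic. */
final class MetadataSteps {

    private MetadataSteps() {}

    /** Preprocess: trims and lower-cases a value such as a language or country code. */
    static String preprocess(String value) {
        return value.trim().toLowerCase(Locale.ROOT);
    }

    /** Validate: reports one message per value missing from the controlled vocabulary. */
    static List<String> validate(String element, List<String> values, Set<String> vocabulary) {
        List<String> errors = new ArrayList<>();
        for (String value : values) {
            if (!vocabulary.contains(value)) {
                errors.add(element + ": value '" + value + "' is not in the controlled vocabulary");
            }
        }
        return errors;
    }

    /** Split: breaks a multi-valued string into trimmed, non-empty single values. */
    static List<String> splitValues(String raw, String separator) {
        List<String> values = new ArrayList<>();
        for (String part : raw.split(Pattern.quote(separator))) {
            String trimmed = part.trim();
            if (!trimmed.isEmpty()) {
                values.add(trimmed);
            }
        }
        return values;
    }
}
```

A file with an empty error list from `validate` would correspond to an entry in valid_files.xml; any other file would be reported in errors.xml.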

Effective Ingest Tool

- Ingest tool;

MediaWiki Report Tool

- A MediaWiki editing tool used to write messages for metadata providers. The outcome consists of MediaWiki pages, linked to existing provider/collection pages.

Workflow

- Metadata providers insert metadata in the K2N MediaWiki, using a template;

- Metadata are pulled from MediaWiki into a cache database;

- The ingest tool is "new metadata sensitive": it ingests the new metadata and creates new Fedora digital objects;

- The GSearch indexing service is triggered via Fedora's messaging service and adds the new digital objects to its indexes;

- If there are any messages for providers, they are shown on a page with the following path: Collection/Metadata_Aggregation_Report;

- The search tool, based on the GSearch engine, is able to retrieve new digital objects after every new ingest.
